California has approved a law that bars Tesla from marketing its software as "Full Self-Driving" (FSD). The legislation builds on the California DMV's assertion that calling the software FSD constitutes "false advertising."

If the new law is not followed, the DMV can revoke the company’s license to produce and sell electric vehicles in California. Tesla is now required to inform and educate its customers about its “FSD” and “Autopilot” features, including the necessary warnings about their limitations.

To comply with the DMV’s instructions, Tesla may also be required to advertise its products through traditional channels. The DMV’s review is still under way, and Tesla plans to defend itself. The company requested a hearing to present its defense, but in the meantime the rule the DMV sought to enforce has become state law.

Tesla FSD

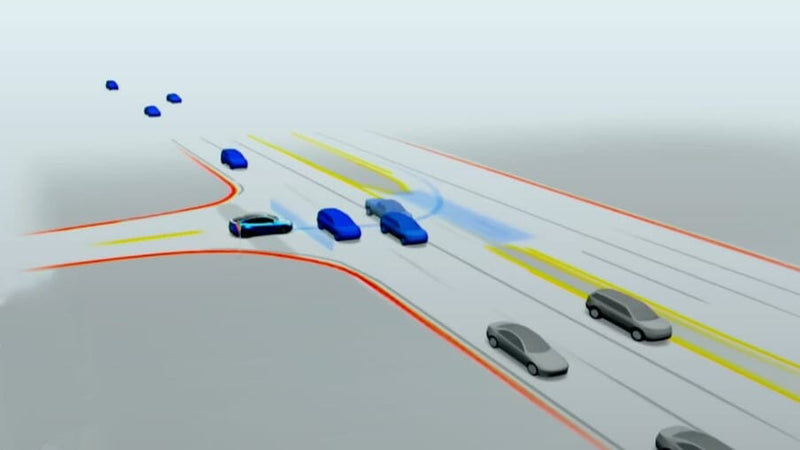

Tesla has been promoting an expensive Full Self-Driving option since 2016. The package’s name can lead many people to believe that it allows a car to drive entirely on its own. That is not the case: no automobile currently for sale to consumers is fully autonomous.

California DMV regulations already prohibit advertising a vehicle as "self-driving" when it is not, but those regulations have never been enforced. The state legislature has now bypassed the DMV by turning the false-advertising rule into state law.

False advertising of self-driving cars is a major safety hazard. Tesla’s Autopilot, a more basic and cheaper driver-assistance package than FSD, has been linked to several deaths.

It is still unknown how many deaths, injuries, and crashes FSD has caused. The nation’s crash-reporting system, fragmented across states and cities, is poorly equipped to capture the kind of information that matters more and more now that vehicles are controlled by software.

Small computers embedded throughout a modern vehicle such as a Tesla collect and analyze enormous quantities of data, which can be transmitted to the manufacturer over cellular and Wi-Fi connections. Tesla has avoided sharing this data with safety researchers and regulators.

Regulatory pressure is starting to build. The National Highway Traffic Safety Administration (NHTSA) is investigating the company’s safety record, including a series of crashes in which Tesla vehicles struck emergency vehicles parked at the roadside. NHTSA also recently ordered Tesla to provide detailed information about crashes involving its automated driving systems.

It is not yet clear how effective the new law will be. Enforcing it will remain the DMV’s responsibility.

Misleading marketing of autonomous cars was already prohibited in California. Now that the law has passed, however, it offers strong evidence of legislative intent, the kind that would matter to a judge or a state administrative agency.

Tesla's False Advertising

California’s DMV claims that Tesla’s marketing of its FSD and Autopilot features amounts to false advertising designed to mislead consumers.

In its July filing, the DMV stated that if Tesla is found to be in breach, the agency has the authority to revoke the company’s licenses to manufacture and sell cars in California. The DMV also stated that any penalties resulting from the process, which is expected to take at least a few months, would likely be far more lenient than revocation.

The new measure focuses not on the technology being sold or its safety, but on how it is advertised. In small print on its website and in its manuals, Tesla states that human drivers must pay full attention, whether they are using FSD "beta," software designed to obey traffic signals as it navigates a pre-programmed route, or Autopilot, which offers automated lane changes and adaptive cruise control. YouTube is filled with videos showing the work-in-progress nature of FSD, complete with traffic violations and dangerous maneuvers.

In addition to outlawing misleading advertising, the measure imposes new requirements on automakers: they must describe the capabilities and limitations of partial automation when a new vehicle is delivered and whenever its software is updated.

A 2018 survey found that around 40 percent of owners of vehicles with driver-assistance features like Autopilot believed their car could drive itself. A state law requiring manufacturers to explain their vehicles’ capabilities and limitations should help close this knowledge gap.

The American Automobile Association and many automakers helped draft the bill’s language, and Tesla lobbied heavily on it. False advertising was already prohibited under DMV regulations, yet the FSD marketing persisted for six years on social media, in Elon Musk’s public presentations, and on Tesla’s website.

Is there a problem with the term FSD?

Avoiding the phrases "Full Self-Driving" and "Autopilot" until the technology has been proven to work may not be the worst idea. This new law, combined with what Tesla already does to educate drivers, could still save some lives. But the major issue remains unaddressed.

Most users test the system knowing it is still under development. For a variety of reasons, they willingly take on the role of guinea pigs, and sometimes even use their children as test subjects.

Overall, the law looks primarily like a feel-good action by an industry seeking a reason to shut down a severely negligent Tesla, using a strategy that sidesteps the actual root cause. Its members do not want Tesla spoiling their party by harming more users than they themselves do.

That industry, incidentally, includes several businesses that falsely claim to be driverless and decline to offer any evidence for it, among them Argo, Cruise, and Waymo. Tesla has not really claimed to be driverless, but these companies have. Yet the government, the industry, and the press all stayed silent, and complicit, when they did.

What’s the root cause?

The core cause is the untenable and needless practice of using humans as guinea pigs to generate scenarios for machine learning. The approach depends on crashes, injuries, and deaths in order to learn.

The number of object and scenario variations the process must encounter, again and again, is enormous, and there is simply not enough time or money to cover them all. As a result, people will keep dying because an industry has convinced everyone that this is necessary for it to achieve greatness.

The self-driving car industry still has not moved from gaming-based simulation technology to DoD/aerospace-grade simulation, which could fix the cost and safety problems. The only reason is that doing so would mean admitting its mistake, which could jeopardize future funding.

Adopting a reliable sensor suite that includes LiDAR and radar could partially fix the problem for Tesla. The catch is that many other vehicles, including their automatic emergency braking (AEB) systems, rely on the same low-fidelity radar technology, which cannot accurately identify moving or stationary objects. And few use LiDAR to detect objects and generate tracks the way a radar does.

Another effective solution would be to compel Tesla to shorten the delay before its driver-monitoring system issues an alert. That delay frequently lasts 20 seconds or longer, which makes the system worthless. That is probably the point. After all, Tesla gains nothing if no crash occurs.
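To put the 20-second figure in perspective, the short sketch below converts an alert delay into distance traveled. It assumes the car holds a constant speed during the delay; the speeds used are illustrative examples, not measurements from any Tesla data, and the function name is hypothetical.

```python
# Distance a car covers before a delayed driver-monitoring alert fires.
# Assumption: constant speed during the delay. The 20 s delay is the
# article's figure; the speeds below are illustrative only.

MPH_TO_M_PER_S = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def distance_during_delay(speed_mph: float, delay_s: float) -> float:
    """Return metres travelled at constant speed during the alert delay."""
    return speed_mph * MPH_TO_M_PER_S * delay_s


if __name__ == "__main__":
    delay = 20.0  # seconds, per the article's "20 seconds or longer"
    for mph in (30, 45, 65):
        metres = distance_during_delay(mph, delay)
        print(f"{mph} mph for {delay:.0f} s: {metres:.0f} m")
```

Under these assumptions, a 20-second delay at 65 mph corresponds to roughly 581 m, more than a third of a mile, driven before the system prompts an inattentive driver.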